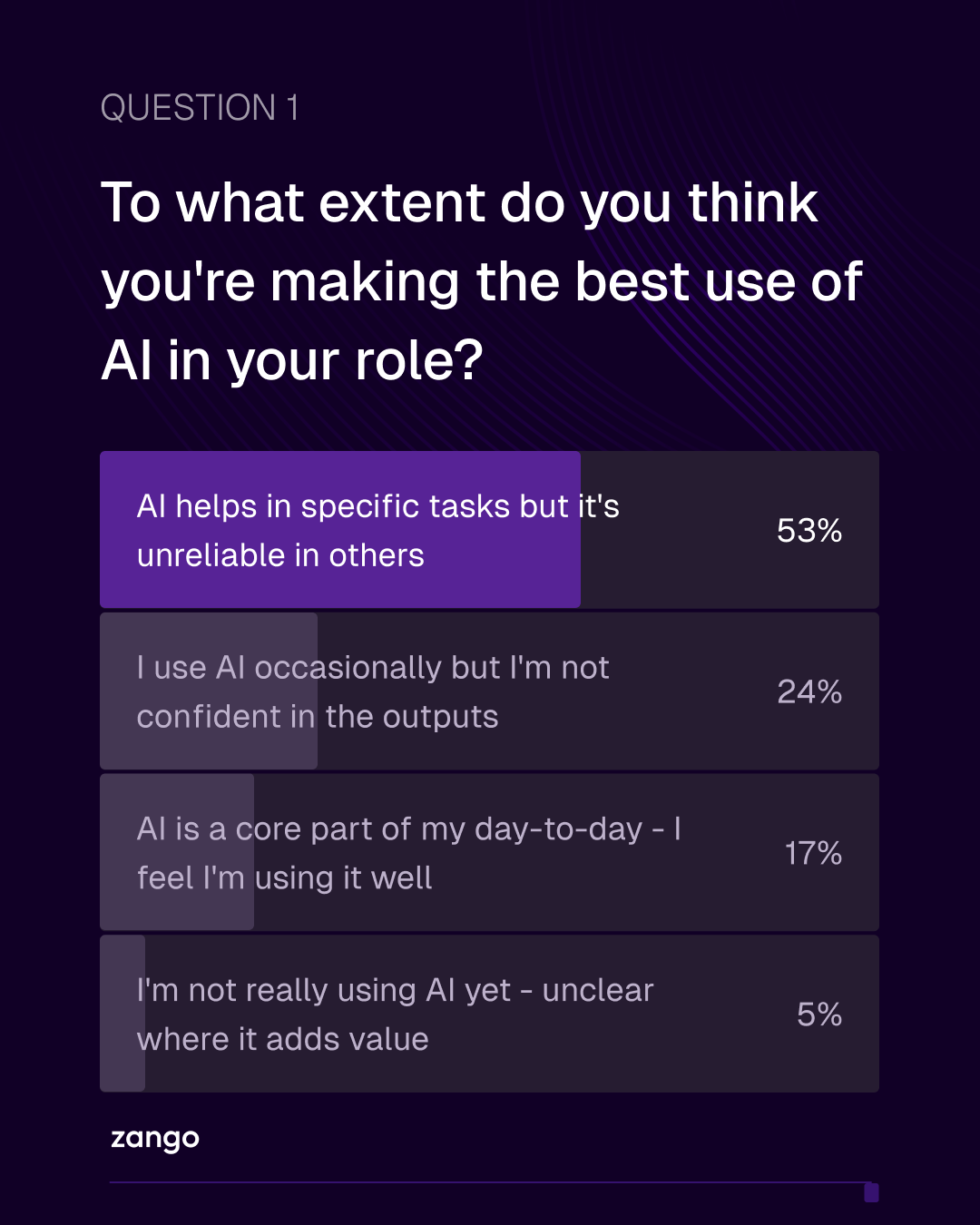

In our recent masterclass on “How to prompt like an AI engineer for compliance”, we asked a series of questions which shone a light on AI use in compliance teams across financial services.

AI adoption is rising - but remains highly “task-bound”

Most compliance and risk professionals now use AI in some form, but usage remains cautious. It's focused on isolated tasks such as summarisation, key-point extraction and redrafting. Very few teams are applying AI across end-to-end workflows or embedding it into the core operating processes that drive compliance work.

When confidence in accuracy is low, firms may be happy to experiment, but live deployment is risky. As a result, teams restrict AI to tightly scoped activities. This helps to explain why only 5% of AI pilots make it to production.

The biggest blocker to adoption is trust

Our polling shows that confidence in output accuracy is the single biggest barrier to adoption in financial services.

Trust, in this context, does not mean assuming AI outputs are correct. It means teams having the ability to evaluate outputs consistently - to know when an output is reliable and when intervention is needed. Institutions cannot scale AI responsibly without that capability.

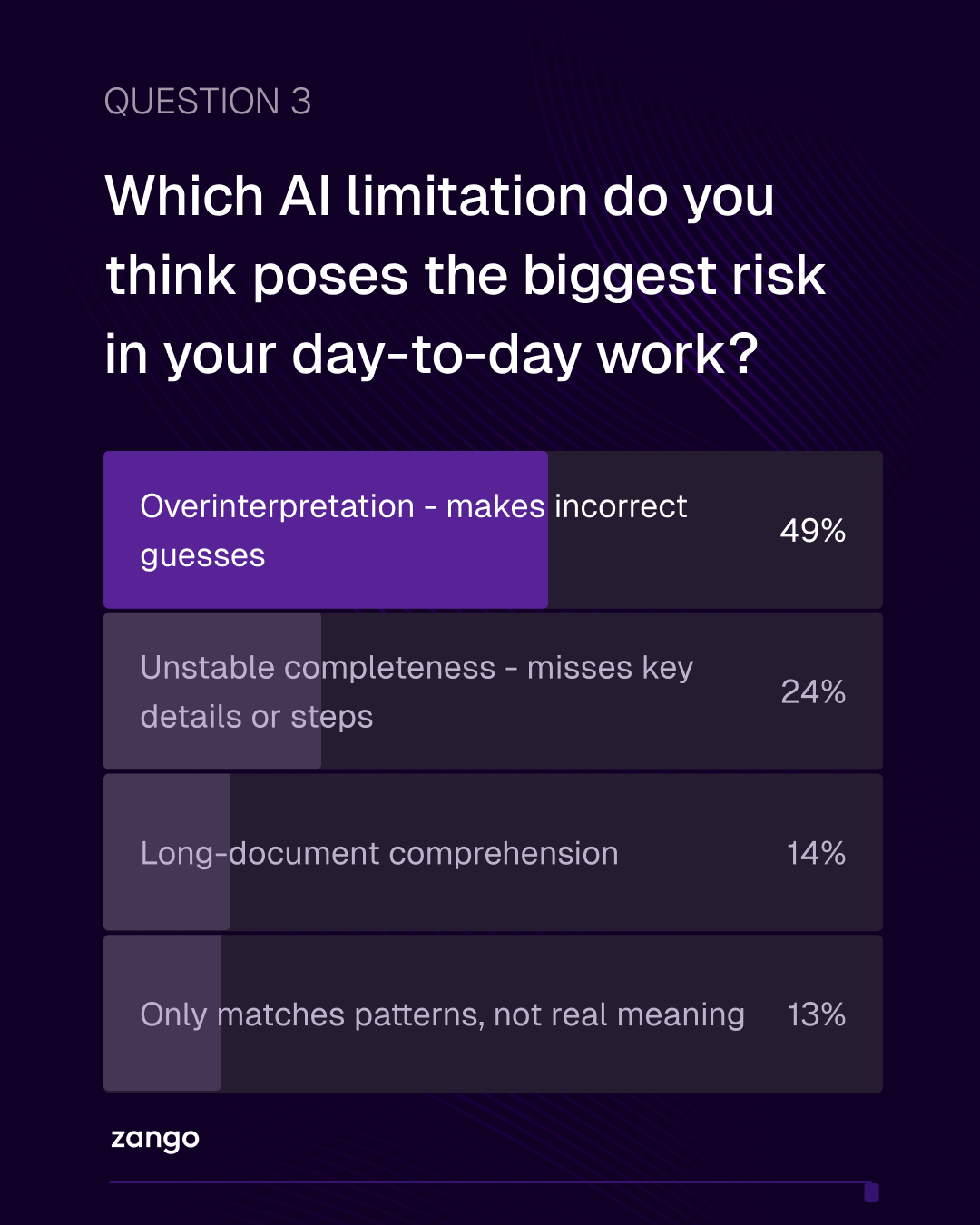

The top risk is overinterpretation

When asked which AI limitation concerns them most, compliance professionals consistently highlighted overinterpretation - where a model finds meaningful patterns in data that aren't actually there.

Overinterpretation errors are particularly challenging for compliance teams because:

- they often sound confident and coherent;

- they may pass unnoticed without deliberate evaluation;

- they can introduce regulatory and operational risk if integrated into workflows.

General-purpose AI models are particularly prone to this when dealing with ambiguous or complex regulatory language. For example, a model may extract an incorrect definition from a regulatory text.

While accuracy rates can be significantly improved with domain-specific AI systems (i.e. specialised AI for compliance, post-trained on regulatory texts), fine-tuning alone cannot eliminate these risks.

The capability gap: evaluating AI outputs

Together, the evidence points to a specific and addressable capability gap: the ability to systematically evaluate AI outputs across compliance workflows.

There is a growing body of practical techniques - such as structured prompting, counterfactual testing, consistency checks, source-anchored evaluation and governed review workflows - that allow teams to understand:

- where AI can be used reliably; and

- where outputs require deeper scrutiny or human judgement.
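As a rough illustration of two of these techniques, the sketch below pairs a source-anchored check (does the passage the model quotes actually appear in the source text?) with a simple consistency check (do repeated runs of the same prompt agree?). It is a minimal sketch under stated assumptions, not a production control: the function names, the 0.8 threshold and the sample text are all hypothetical, and the "regulatory" sentence is invented for the example.

```python
import re
from collections import Counter
from typing import Sequence


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so trivial formatting differences are ignored."""
    return re.sub(r"\s+", " ", text).strip().lower()


def is_source_anchored(quote: str, source_text: str) -> bool:
    """True only if the quoted passage appears verbatim (after normalisation) in the source."""
    q = normalise(quote)
    return bool(q) and q in normalise(source_text)


def consistency_score(answers: Sequence[str]) -> float:
    """Share of repeated runs that match the most common answer (1.0 = fully consistent)."""
    if not answers:
        return 0.0
    counts = Counter(normalise(a) for a in answers)
    return counts.most_common(1)[0][1] / len(answers)


def needs_review(quote: str, source_text: str, answers: Sequence[str],
                 min_consistency: float = 0.8) -> bool:
    """Flag an output for human review if it is unanchored or inconsistent.

    The 0.8 threshold is an arbitrary illustrative value, not a recommendation.
    """
    return (not is_source_anchored(quote, source_text)
            or consistency_score(answers) < min_consistency)


# Invented sample text, used only to exercise the checks.
SOURCE = "A 'retail client' means a client who is not a professional client."

if __name__ == "__main__":
    # Quote found in the source and all runs agree: no review flag.
    print(needs_review("a client who is not a professional client",
                       SOURCE, ["Yes", "yes", "YES"]))
    # Quote not found in the source: flagged for review.
    print(needs_review("a client with limited investment experience",
                       SOURCE, ["Yes", "yes", "YES"]))
```

Checks like these cannot confirm that an output is correct. They only surface outputs that cannot be traced back to the source or that vary between runs, which is where human judgement is most needed.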

Without these skills, teams will never be able to trust AI outputs. And as enterprise AI shifts from ad hoc task automation to embedding AI across workflows, the risk of undetected errors compounds.

Closing the gap

To help close the trust gap, our upcoming masterclass - “AI for compliance: spotting rogue AI outputs” - focuses on the evaluation skills needed for responsible AI adoption.

If these capabilities aren’t developed, firms risk either failing to capture the benefits of AI or introducing errors into regulated workflows that could have a significant impact - both for financial institutions and for the customers that rely on them.