Could you briefly describe your role and how it connects AI and compliance?

My role, as a senior compliance manager, is to bridge compliance and technology - ensuring that digitalization, automation, and AI tools are used responsibly and meet regulatory standards.

I oversee complex programmes that drive the digital transformation of compliance processes, helping to implement and govern solutions that increase efficiency and consistency. This includes setting policies for ethical AI, validating models, and establishing controls and oversight mechanisms so that technology - whether automation or AI - is used responsibly, ethically, and in line with applicable laws.

Looking ahead over the next few years, where do you expect AI use to expand the most in financial services?

AI’s role in compliance will grow from experimental to indispensable. There are still challenges to solve, but the direction of travel is clear. Predictive analytics can help forecast risks, while generative AI can assist with drafting compliance documents, policies, and first versions of incident reports or regulatory responses, which compliance teams can then refine.

Over time, we may also see more autonomous compliance capabilities handling lower-risk tasks. The opportunity is to make compliance more real-time and proactive - preventing issues rather than identifying them after the fact. The key challenge remains explainability, as complex models can still behave like a black box.

How is your organisation thinking about AI adoption in compliance?

We support adoption and see this as a critical moment for the industry. Financial services firms face strong market pressure, and maintaining sustainable returns means improving productivity. A large proportion of operational cost sits in manual processes, so using automation responsibly is an important lever for improving efficiency.

Can you share a practical example of where AI has already improved a compliance process?

We are still at an early stage, but one practical example is gifts and hospitality. When an employee receives a gift, we need to assess whether it creates a conflict of interest. We now use an AI-enabled, end-to-end process that supports this assessment, applies policy thresholds, and generates documentation for review and escalation.

Previously, this relied heavily on email and manual follow-up. Automating it has reduced administrative effort and made the process more consistent and auditable.

Are there other areas where you see technology having strong potential in compliance?

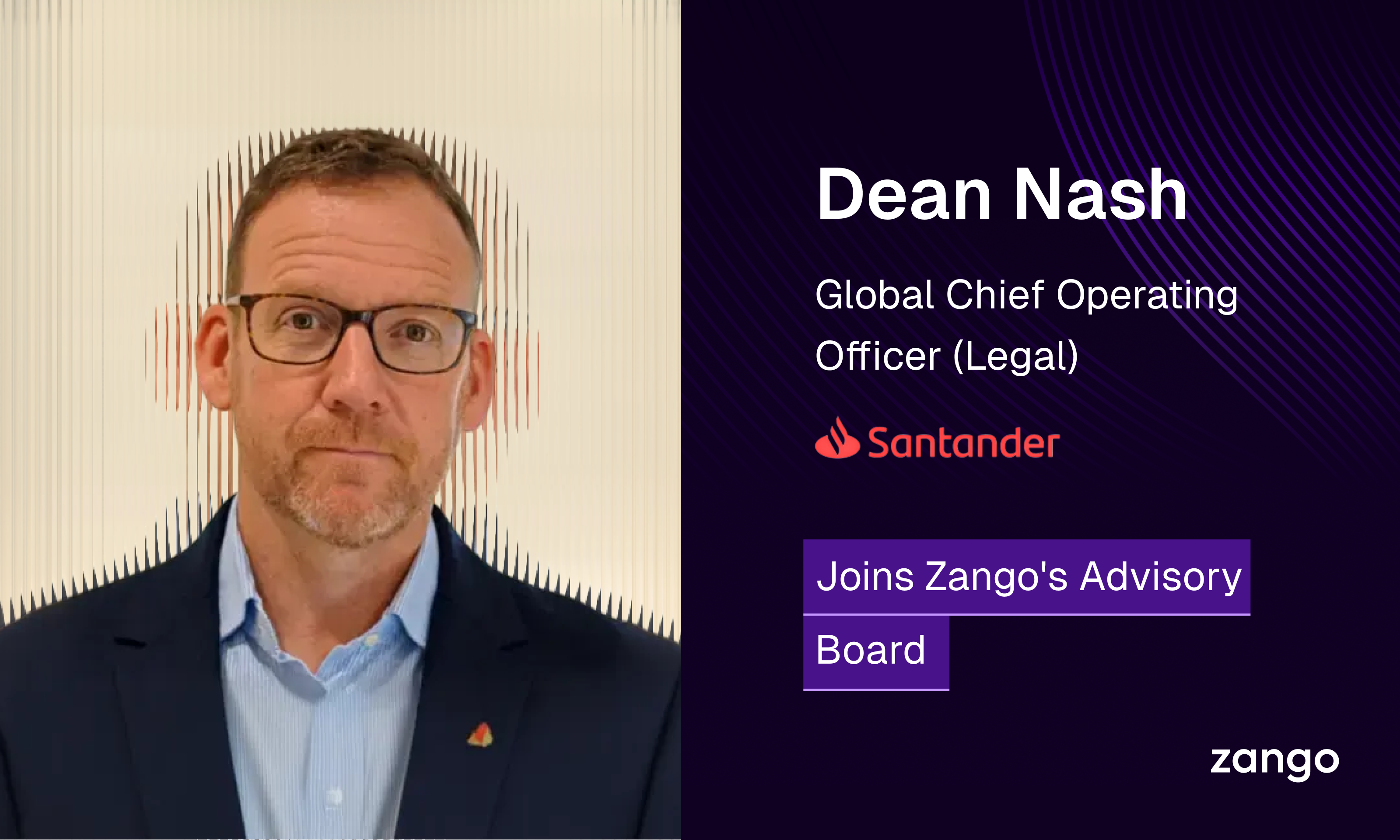

Yes. We see strong potential in tools that help analyse internal policies and check alignment with internal rules. Solutions like Zango’s can be a real lever here - supporting policy analysis and helping teams assess requirements more efficiently. We have no doubt that this is the right direction, particularly as policy complexity continues to increase.

How does your organisation’s approach compare to what you see elsewhere in the sector?

There is strong senior support for automation and AI-enabled transformation. Like many established institutions, we are balancing innovation with existing systems and processes. The focus is on keeping pace with the direction of travel across the industry, strengthening risk analysis, and modernising processes in a controlled way to remain competitive.

What skills or capabilities will organisations need more of to govern AI effectively?

Common standards and best practices will be essential, alongside close collaboration between industry and regulators. Initiatives such as sandboxes and pilot programmes allow innovation under supervision and help shape smarter regulation.

Explainability remains a major challenge, particularly when engaging with regulators. Alongside this, investment in talent and culture is critical. Compliance teams need stronger data and technology literacy so they can engage effectively with AI systems, interpret outputs, and explain decisions clearly. Ethics, resilience, and strong fail-safes are also key to building trust.

How well do existing governance frameworks fit the AI systems now being used?

Traditional governance frameworks - risk management, internal controls, and model governance - still apply. However, for generative AI and more advanced agent-based systems, additional controls are needed, such as enhanced ethics reviews and stricter human oversight.

We also need to extend familiar concepts like audit trails, change management, and continuous monitoring to AI models that evolve over time. Regulators will continue to expect transparency and accountability, and using AI will not be accepted as an excuse for failures.

How do you personally feel about the changes AI may bring?

I’m very positive. As an engineer by background, I’ve seen how technology has evolved, and AI represents a major opportunity. In areas like transaction monitoring and communications screening, machine learning can operate at a speed and scale that simply isn’t possible for humans alone.

Data volumes are growing exponentially, and AI allows teams to focus their expertise where it matters most - reducing manual workload, lowering false positives, improving detection, and providing earlier insight into emerging risks. Used correctly, it augments teams rather than replacing them.

That said, culture and skills remain the biggest challenges. Change management is essential.

What advice would you give to compliance professionals starting their AI journey today?

Don’t resist it. Start with proofs of concept in a controlled environment, using masked data. Once you see the value, scale responsibly. With increasingly complex regulation and growing data volumes, there is no realistic alternative to using these technologies. The expected value is greater efficiency, accuracy, and stronger support for compliance teams.